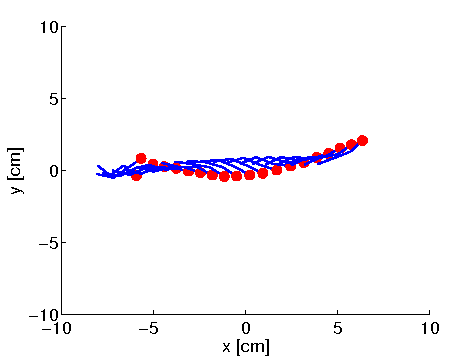

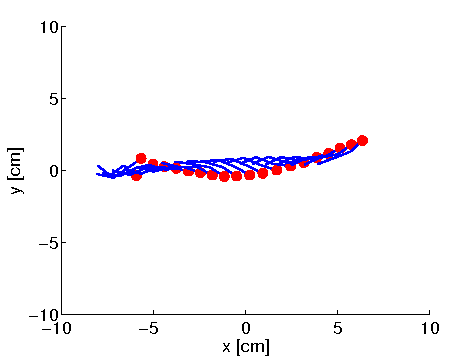

In this task you have to learn optimal policies for the swimmer (see Figure 3) using different policy gradient methods.

You have to compare three algorithms for computing the policy gradient, namely Finite Differences, Likelihood Ratio and the Natural Policy Gradient. The robot is a 3-link (2-joint) snake-like robot swimming in water. It has two actuators, and you have to learn how to use them to swim as fast as possible in a given direction. The model, the policy and the reward function are already given in the provided MATLAB package swimmer.zip (see footnote 7).
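To make the difference between the first two estimators concrete, here is a minimal Python sketch on a toy problem. It does not use the provided swimmer package: `toy_return` is a hypothetical stand-in for the return of a rollout, and the function names, step size, noise level and sample count are all assumptions chosen for illustration. The likelihood-ratio estimator shown here perturbs the parameters once per rollout (an episode-based variant), which is simpler than the per-step noise of the DMP policy used in the task.

```python
import random


def toy_return(theta):
    """Hypothetical stand-in for a rollout return: concave quadratic, optimum at (1, -2)."""
    return -((theta[0] - 1.0) ** 2) - 2.0 * (theta[1] + 2.0) ** 2


def finite_difference_gradient(f, theta, eps=1e-4):
    """Central finite differences: perturb one parameter at a time by +/- eps."""
    grad = []
    for i in range(len(theta)):
        up = list(theta)
        down = list(theta)
        up[i] += eps
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2.0 * eps))
    return grad


def likelihood_ratio_gradient(f, mean, sigma=0.1, n_samples=5000, seed=0):
    """Likelihood-ratio estimate of the gradient w.r.t. the mean of a Gaussian
    over parameters: average of grad-log-density times (return - baseline)."""
    rng = random.Random(seed)
    samples = [[m + sigma * rng.gauss(0.0, 1.0) for m in mean]
               for _ in range(n_samples)]
    returns = [f(s) for s in samples]
    baseline = sum(returns) / n_samples  # mean return as a simple baseline
    grad = [0.0] * len(mean)
    for s, r in zip(samples, returns):
        for i in range(len(mean)):
            # d/d(mean_i) log N(s | mean, sigma^2 I) = (s_i - mean_i) / sigma^2
            grad[i] += (s[i] - mean[i]) / sigma ** 2 * (r - baseline)
    return [g / n_samples for g in grad]


if __name__ == "__main__":
    theta0 = [0.0, 0.0]  # analytic gradient of toy_return here is (2, -8)
    print("finite differences:", finite_difference_gradient(toy_return, theta0))
    print("likelihood ratio:  ", likelihood_ratio_gradient(toy_return, theta0))
```

On this noise-free toy return the finite-difference estimate is essentially exact, while the likelihood-ratio estimate is noisy and only approaches the true gradient as the number of samples grows. With a stochastic simulator, finite differences also become noisy, which is part of what the comparison in this task is meant to show.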

The policy is a stochastic Gaussian policy implemented by a Dynamic Movement Primitive (DMP). The DMP uses 6 centers per joint. Since the movement is periodic, the phase variable of the DMP is also periodic. The policy is stochastic because it adds noise to the velocity variable of the DMP, i.e.

where